The origin of C is on “big” machines implementing Unix, not 8/16-bit micros

C was first implemented on the PDP-11, a 16-bit machine (considered pretty normal at that time). That’s why it has the weird pre/post increment operators: they correspond directly to the PDP-11 instruction set (and to the VAX's too, but that came later).

Airborne_Again wrote:

It is a fancy assembler

I think if you compare the executable size of a modern C compiler with a modern assembler, you’ll notice how far from reality this is. The heaviest part is probably the optimization engine.

Airborne_Again wrote:

If you use uint32_t instead and 32-bit constants, then ~x==y would be true on an architecture with 32-bit ints, but not on one with 64-bit ints.

I think you misunderstand what guarantees C gives. The C language has a standard (currently C17), and under this standard uint32_t bit negation and equality comparison are well-defined regardless of the actual architecture.

Airborne_Again wrote:

Well, it would be 1. Again the underlying hardware is exposed, as it doesn’t have a boolean data type.

CPUs have flags, which are essentially boolean (they can’t have any values other than “set” or “unset”). The == operator, in reality, will translate into something like “cmp+jne” (assuming no optimizations).

While C is close to the metal, calling it “fancy assembler” is reductio ad absurdum: if you want to go down that route, you could call any imperative language, regardless of what datatypes it has, “fancy assembler” (e.g. “Java is just fancy assembler for Java bytecode”).

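You can compare ~foo with bar successfully like this, by avoiding the implicit conversion to int (which makes ~ set all the bits above bit 7 as well):

```c
#include <stdio.h>
#include <stdint.h>

int main(int argc, char **argv) {
    uint8_t foo = 0x55;
    uint8_t bar = 0xaa;
    /* the cast discards the upper bits set by the promotion to int */
    if ((uint8_t)~foo == bar) printf("foo equals ~bar\n");
}
```

```
$ gcc -o test test.c
$ ./test
foo equals ~bar
```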

by avoiding the implicit conversion to int

Sure; one could also do it by masking:

```c
if ((~foo & 0xFF) == bar)
```

My point would be that this int promotion is not something an assembler would do for you. If you invert a byte (sub)register, you get exactly that.

Or:

```c
foo = ~foo;  /* storing back into a uint8_t keeps only the low 8 bits */
if (foo == bar) printf("foo equals ~bar\n");
```

I don’t think this has anything to do with the “un-asm-likeness” of it; it’s just one of those quirky traps you end up with in a language with such a long history. The mere fact that you have multiply, divide and float in C, and that these (generally) still work fine on small CPU architectures without multiply instructions or an FPU, shows it’s really not just “fancy asm”.

I confess I’ve often called C “assembler on steroids” but strictly tongue in cheek. I don’t think it’s worth getting into an argument, even a friendly one, about the precise semantics of what that means. To me it means things like having access to raw pointers, and the ability to shoot yourself comprehensively in any part of your anatomy with them.

johnh wrote:

I confess I’ve often called C “assembler on steroids” but strictly tongue in cheek. I don’t think it’s worth getting into an argument, even a friendly one, about the precise semantics of what that means. To me it means things like having access to raw pointers, and the ability to shoot yourself comprehensively in any part of your anatomy with them.

Of course the term “assembler” is not really important in this discussion. The important thing (and what started the discussion) is what constitutes a high-level language. To me that is a language which abstracts away from the underlying hardware, something that C, on purpose, does not.

Interestingly, whoever wrote the Wikipedia article on high-level languages has the same view as I do.

A key passage is this:

Examples of high-level programming languages in active use today include Python, Visual Basic, Delphi, Perl, PHP, ECMAScript, Ruby, C#, Java and many others.

The terms high-level and low-level are inherently relative. Some decades ago, the C language, and similar languages, were most often considered “high-level”, as it supported concepts such as expression evaluation, parameterised recursive functions, and data types and structures, while assembly language was considered “low-level”. Today, many programmers might refer to C as low-level, as it lacks a large runtime-system (no garbage collection, etc.), basically supports only scalar operations, and provides direct memory addressing. It, therefore, readily blends with assembly language and the machine level of CPUs and microcontrollers. Also, in the introduction chapter of The C Programming Language (second edition) by K&R, C is considered as a relatively “low level” language.

it’s just one of those quirky traps you end up with in a language with such a long history.

I wonder when the integer promotion quirk came in. AIUI it is an attempt to optimise the language for micros which have an int bigger than 8 or 16 bits. If it started on the PDP-11 (int being 16 bits), then it may have been there from the start. The unfortunate thing is that, unlike asm, which will do exactly what you type, C has somewhat buggered support for types shorter than int, except in the amount of storage required (and even that may not be true for locals, given that lots of CPUs need word-aligned storage).

Just the fact that you have multiply, divide and float in C, and these (generally) still work fine on small CPU architectures without multiply instructions or an FPU shows it’s really not just “fancy asm”.

Yes, that is what makes it so brilliant. And uint64_t, and doubles for unusual projects. I used 64-bit ints a lot for intermediate variables in ARINC 429 code, where you have to precisely control 32-bit under/overflow cases.

Of course the term “assembler” is not really important in this discussion

It came from “C is little more than a portable assembler”.

Examples of high-level programming languages in active use today include Python, Visual Basic, Delphi, Perl, PHP, ECMAScript, Ruby, C#, Java and many others.

About 4 out of those 9 are basically dead languages today. Delphi has been dead for many years. Ruby peaked maybe 15 years ago and today is used by almost nobody other than £1000/day corporate coders, along with maintaining COBOL-66. ECMAScript? The wiki needs updating, but really it was written from a particular perspective.


no garbage collection

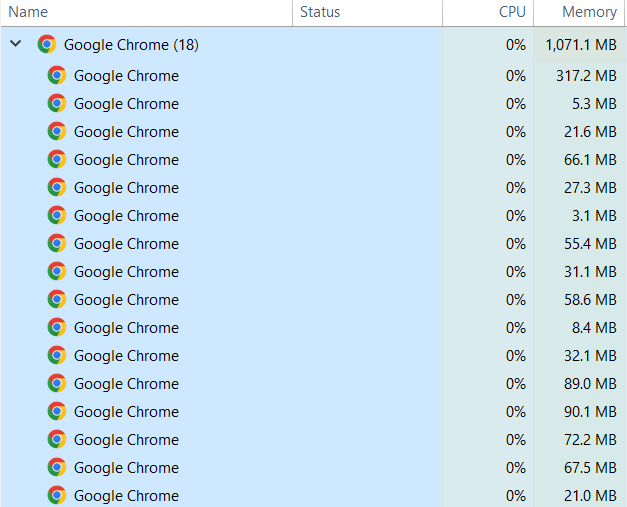

Garbage collection implies an extra level of indirection in memory access, which is not only a performance hit but also suggests that the heap is used in a disorganised manner. That is OK on a PC with 24GB of RAM etc., but it is absolutely dangerous in any safety-critical application. In fact a heap is a really bad idea in anything that has to work reliably, unless (a) it has such huge resources that nobody notices the memory usage, (b) every malloc() is followed by a 100% assured free(), or (c) the heap is used for product options which, once activated, run until power-down (I use only (c)). Look at Chrome casually allocating memory; clearly there are way too many couches at Google HQ… the following is just a list of open tabs, so this is a completely grotesque use of memory.

many programmers might refer to C as low-level

It is low level, which makes it excellent for embedded work. For knocking out PC apps, almost everybody is using M$ VC++. For server-side programming, the fashion changes every few years, with PHP as popular as ever but universally derided if you are looking for peer-group admiration, because if you want to write crap code it is the server-side equivalent of C. Java today is the job-protection equivalent of the Cisco Certified Engineer qualification.


Python is in fashion even for embedded work but is not lightweight (it is normally interpreted); I looked at putting it on a target I am working on now, and it needed 50-100 KB of code space and loads of RAM, and the performance hit is a factor of 10, as with interpreted languages generally.

Peter wrote:

Garbage collection implies an extra level of indirection in memory access, which not only is a performance hit

You might have missed what was already known about “performance-safe” garbage collection 40 years ago.

but suggests that the heap is used in a disorganised manner. That is OK on a PC with 24GB of RAM etc but is absolutely dangerous in any safety critical application.

The important difference is whether you know the exact size of your data structures in advance, not whether they are allocated on the heap, on the stack, or statically.

I wasn’t referring to the garbage collection delay.

Sure, you have to know the data structures in advance. In critical systems, only statics are allowed… and for good reasons.